CSV data/field validation using Power Automate & Parse JSON

By default, Power Automate does not come with a way to validate the content of a field coming through your flows, besides for validating JSON payloads using the Parse JSON action.

You could create a loop with conditions and evaluate each of your rules one at a time, but this is very inefficient. This method would create thousands of flow action runs if provided with a large enough dataset, causing significant cost to users on pay as you go plans or limited licences.

But Parse JSON is a lot more powerful than initially thought - and it supports nearly the whole JSON schema specification as an input, allowing you to validate everything from the data type to allowed values in a column to full if-this-then-that rules. Additionally, it can process up to a 100 MB JSON payload in a single flow action.

In this tutorial, I will demonstrate how Parse JSON can be used to validate the content of the fields on a CSV file, including converting the CSV sheet to JSON for evaluation.

A few caveats before we begin:

- This functionality only works on files with a fixed format. If the format changes significantly between files, then it cannot be evaluated using this method, and you may need something more than Power Automate.

- Extending this out to several evaluations may hit Power Automate’s data transfer limits with large files.

- Multiple rules can be evaluated at once, but the errors may be cryptic to an end user.

Setting up the Data Transformation Process

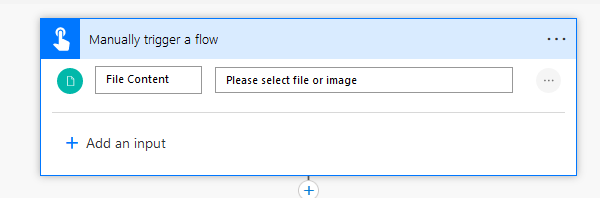

This demo configuration uses an instant trigger with a single file to be uploaded. Other sources, such as Dataverse or OneDrive, may require other flow actions.

When a file is first uploaded to Power Automate, it will be in base64 format as a large string. We first need to add a Data Transformation Compose action to decode the string back to the original CSV contents. This is done using the base64ToString function. In this case, I used the expression

base64ToString(triggerBody()['file']['contentBytes']) Change this as required for your trigger and input actions.

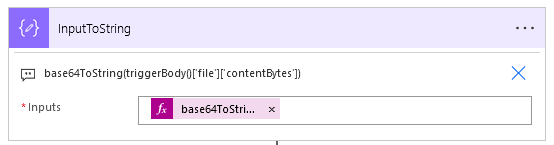

Once the string is decoded, we use another Compose action to take the string to a set of arrays. CSVs use different line endings depending on what editor was last used to prepare the file. As this CSV was edited on Windows using Excel, the line endings are in Windows format, so our split function will use decodeUriComponent('%0D%0A'). For Unix line endings (aka macOS or Linux users), you need to use decodeUriComponent('%0A'). In this case, I renamed the last function to “InputToString”, so the formula for the Compose action is:

split(outputs('InputToString'), decodeUriComponent('%0D%0A'))

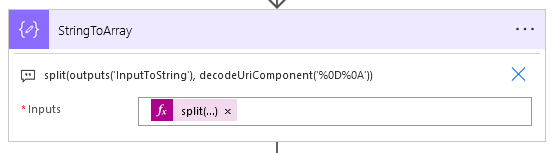

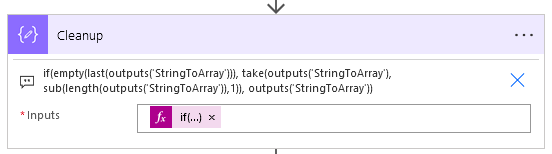

Finally, some input files may have a blank line that will fail being validated through the process. As a result, we’ll need to remove the empty line. This can be done using yet another Compose action, this time with the expression

if(empty(last(outputs('StringToArray'))), take(outputs('StringToArray'), sub(length(outputs('StringToArray')),1)), outputs('StringToArray')) In this case, I renamed the last function to “StringToArray” to make the expression easier to read.

Transforming the CSV to JSON

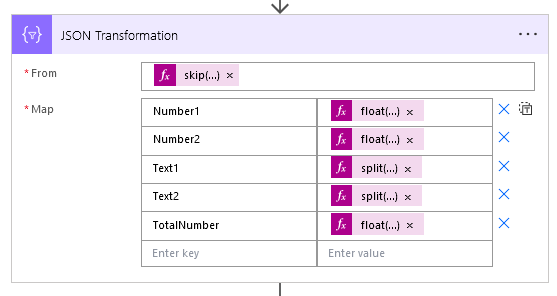

We will be using the Data Transformation Select action to transform the arrays with the CSV into a JSON format.

In the “From” field, input skip(outputs('Cleanup'),1), where the last function was named “Cleanup”.

In this case, I will manually specify headers instead of using the headers from the file. If you wish to use the headers from the file, create another Compose action before this and input split(first(outputs('Cleanup')),','), then access each of the items in the output array by using outputs('Put_Compose_Action_Name_Here')[0], where 0 is the index you wish to access.

For each field, you will need to access the index in the array where the data is located. For example, the first column’s data will be index 0, the second column’s data will be index 1, and so on. Access is performed using the split function:

split(item(),',')?[0] If the field is numeric, you will need to convert the text to a float in order for it to be numeric in the JSON. This can be done by putting a float() function around the split(), like:

float(split(item(),',')?[0]) For my 5 fields, the complete Select function looks like this:

Validating the JSON

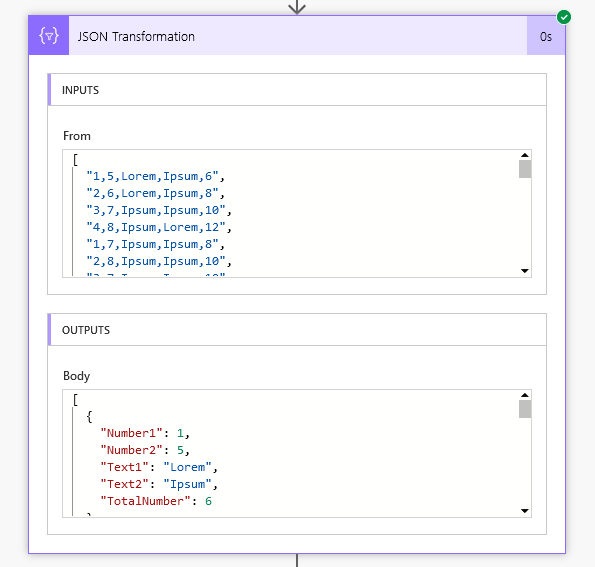

Now that the hard part is out of the way, run your function. You’ll need an example JSON to continue on with this part.

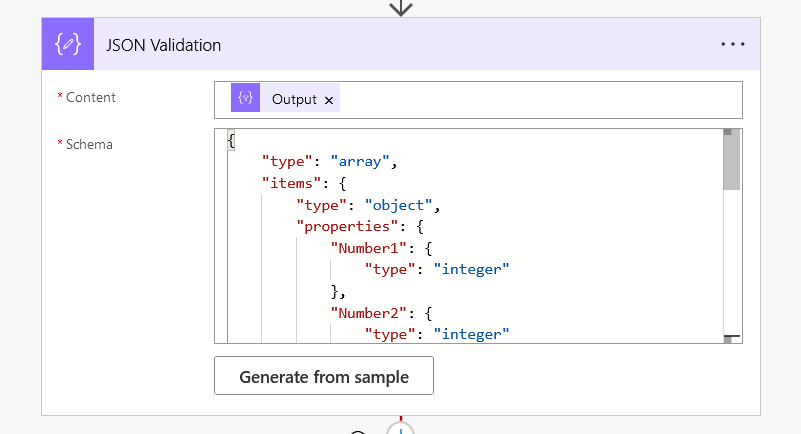

Copy the results from the Outputs Body field. Go back into the Power Automate editor and create a new Data Transformation Parse JSON action. Click “Generate from Sample”, paste in your JSON result, then click Done. Copy the generated schema.

This is your base schema with no rules, so let’s add a few rules. For information on the JSON Schema specification, I recommend the Understanding JSON Schema web-book. For testing your schema, I recommend using the Newtonsoft JSON Schema Validator tool.

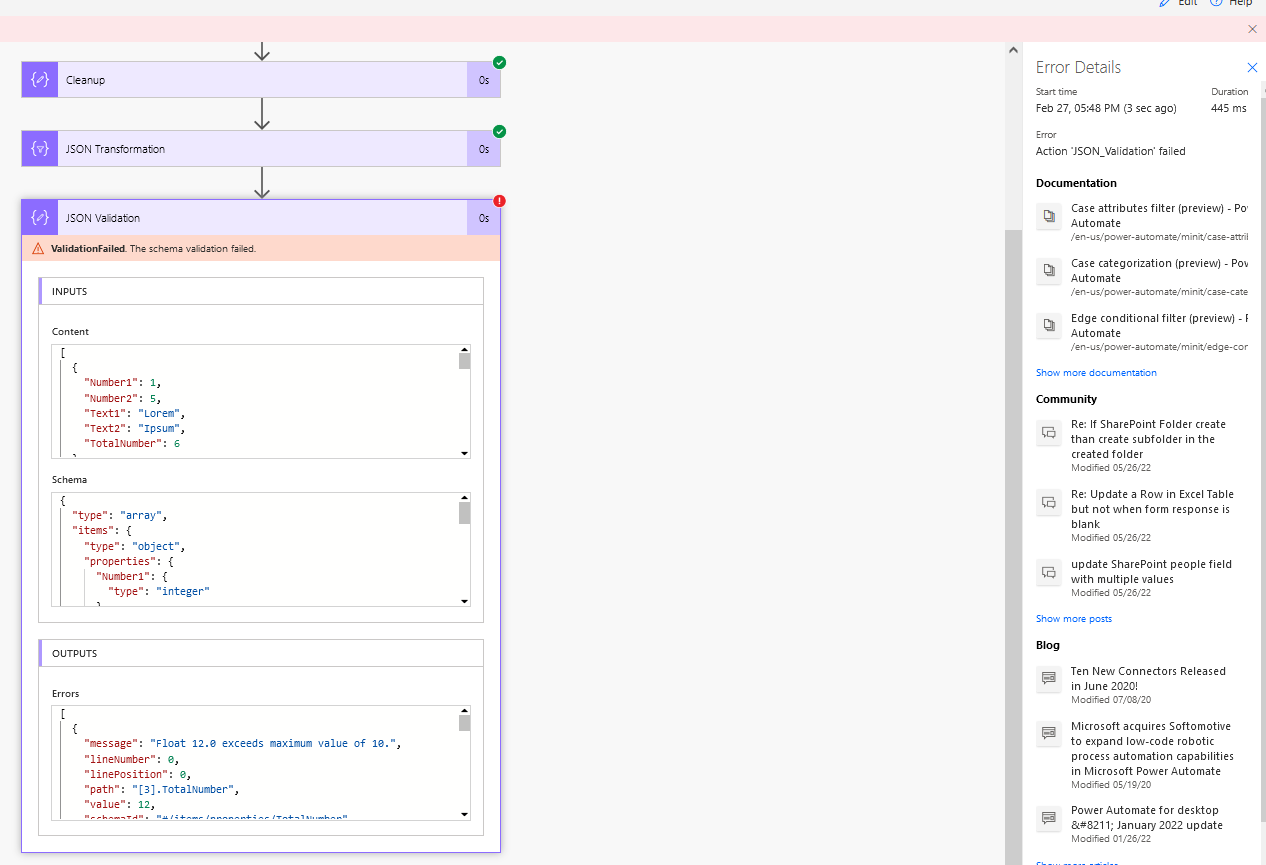

In this case, I have written three rules: filtering the allowed values on Text1 and Text2 using an enum field, and filtering the range of numbers allowed in TotalNumber.

My schema looks like this:

{

"type": "array",

"items": {

"type": "object",

"properties": {

"Number1": {

"type": "integer"

},

"Number2": {

"type": "integer"

},

"Text1": {

"type": "string",

"enum": ["Lorem", "Ipsum"]

},

"Text2": {

"type": "string",

"enum": ["Ipsum"]

},

"TotalNumber": {

"type": "integer",

"minimum": 2,

"maximum": 10

}

},

"required": [

"Number1",

"Number2",

"Text1",

"Text2",

"TotalNumber"

]

}

}

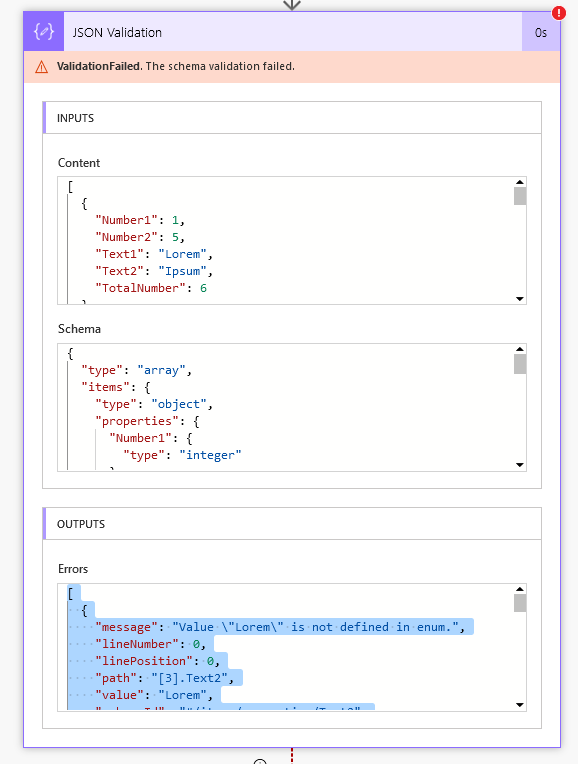

Let’s run the function with a test file with 100 rows, with a few rows of invalid data. The validation failed! In the errors field it has an array of all the errors gathered during the validation, which includes both the value that failed, a reason why, and the location of the failed value. Remember, the values in the path are for an index starting from 0.

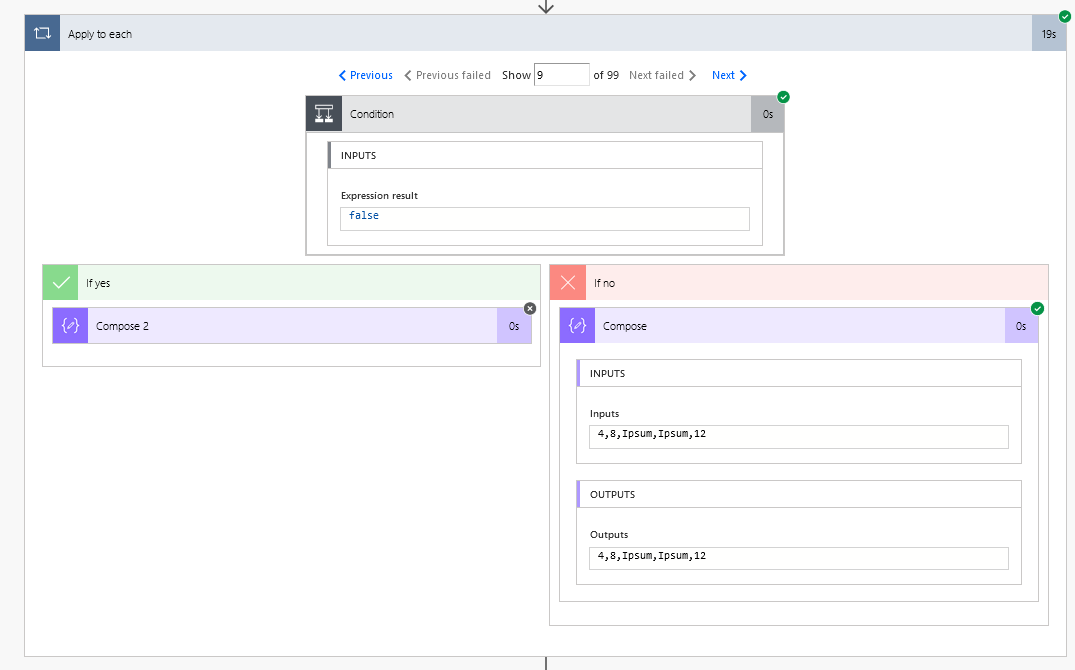

Benchmarks vs other methods

The test data is 99 rows with 3 number and 2 text columns. One column is tested to make sure it is equal to or less than 10.

Using a loop and a condition, it came out to 19 seconds. This function would have used around 200 flow actions.

Using Parse JSON, it came out to 445 ms. This used a single flow action.

Packaged tutorial flow

I’ve packaged this entire demo flow up into a Scope so it can be copied into your own flows. Simply copy the text in the block below, then go to add a new action, go to the “My clipboard” tab, hit Ctrl-V (or Command-V for Mac users) and the scope will appear. Make sure you are using the “Manually trigger a flow” trigger with a single file attachment, or the first stage will not work and the flow will fail.

{"id":"71cca431-d8a8-4b4f-8023-0f0b08cedaad","brandColor":"#8C3900","connectionReferences":{},"connectorDisplayName":"Control","icon":"data:image/svg+xml;base64,PHN2ZyB3aWR0aD0iMzIiIGhlaWdodD0iMzIiIHZlcnNpb249IjEuMSIgdmlld0JveD0iMCAwIDMyIDMyIiB4bWxucz0iaHR0cDovL3d3dy53My5vcmcvMjAwMC9zdmciPg0KIDxwYXRoIGQ9Im0wIDBoMzJ2MzJoLTMyeiIgZmlsbD0iIzhDMzkwMCIvPg0KIDxwYXRoIGQ9Im04IDEwaDE2djEyaC0xNnptMTUgMTF2LTEwaC0xNHYxMHptLTItOHY2aC0xMHYtNnptLTEgNXYtNGgtOHY0eiIgZmlsbD0iI2ZmZiIvPg0KPC9zdmc+DQo=","isTrigger":false,"operationName":"CSV_to_JSON_+_Validation","operationDefinition":{"type":"Scope","actions":{"InputToString":{"type":"Compose","inputs":"@base64ToString(triggerBody()['file']['contentBytes'])","runAfter":{},"description":"base64ToString(triggerBody()['file']['contentBytes'])","metadata":{"operationMetadataId":"53f8f8c3-159d-4234-a7e1-d8800609accf"}},"StringToArray":{"type":"Compose","inputs":"@split(outputs('InputToString'), decodeUriComponent('%0D%0A'))","runAfter":{"InputToString":["Succeeded"]},"description":"split(outputs('InputToString'), decodeUriComponent('%0D%0A'))","metadata":{"operationMetadataId":"630828d1-5737-43f8-a267-16228ff45c43"}},"Cleanup":{"type":"Compose","inputs":"@if(empty(last(outputs('StringToArray'))), take(outputs('StringToArray'), sub(length(outputs('StringToArray')),1)), outputs('StringToArray'))","runAfter":{"StringToArray":["Succeeded"]},"description":"if(empty(last(outputs('StringToArray'))), take(outputs('StringToArray'), sub(length(outputs('StringToArray')),1)), outputs('StringToArray'))","metadata":{"operationMetadataId":"3bb39ef0-c0a4-4b3d-bd58-7ae25cc245ff"}},"JSON_Transformation":{"type":"Select","inputs":{"from":"@skip(outputs('Cleanup'),1)","select":{"Number1":"@float(split(item(),',')?[0])","Number2":"@float(split(item(),',')?[1])","Text1":"@split(item(),',')?[2]","Text2":"@split(item(),',')?[3]","TotalNumber":"@float(split(item(),',')?[4])"}},"runAfter":{"Cleanup":["Succeeded"]},"metadata":{"operationMetadataId":"62585044-dd0b-4c38-b68c-60788fa5026f"}},"JSON_Validation":{"type":"ParseJson","inputs":{"content":"@body('JSON_Transformation')","schema":{"type":"array","items":{"type":"object","properties":{"Number1":{"type":"integer"},"Number2":{"type":"integer"},"Text1":{"type":"string","enum":["Lorem","Ipsum"]},"Text2":{"type":"string","enum":["Ipsum"]},"TotalNumber":{"type":"integer","minimum":2,"maximum":10}},"required":["Number1","Number2","Text1","Text2","TotalNumber"]}}},"runAfter":{"JSON_Transformation":["Succeeded"]},"metadata":{"operationMetadataId":"ebbf5079-3b4c-4afc-8c5a-9e3ceee51ccb"}},"Error_Formatting":{"type":"Select","inputs":{"from":"@outputs('JSON_Validation')['errors']","select":{"ErrorMsg":"@item()['message']","ErrorPath":"@item()['path']","Value":"@item()['value']"}},"runAfter":{"JSON_Validation":["Failed"]},"metadata":{"operationMetadataId":"f6b91697-f331-44e8-8fd5-6a0edbcac62e"}}},"runAfter":{},"metadata":{"operationMetadataId":"9d471e42-89a3-4e60-aa47-12545846ed46"}}} Additionally, here are the testing CSVs I used during this post.

Hope this helped! Please reach out or comment below if you need any help.